7 Must-Have Pre-Commit Hooks for Data Science Projects

A first step towards quality code

In the culinary world of data science, having a well-organized and efficient project is akin to preparing a gourmet meal. Just as a chef selects quality ingredients and follows a meticulous recipe, a data scientist must ensure their codebase is clean, efficient, and “error-free” before serving it up to the world. This is where pre-commit, a powerful tool in the data science toolkit, comes into play. Let's dive into the recipe for a smoother, more reliable data science workflow using pre-commit.

Understanding Pre-Commit

Pre-commit is a framework for managing and maintaining multi-language pre-commit hooks. Think of it as a sous-chef that checks your work before it leaves the kitchen, ensuring everything is in perfect order. Pre-commit hooks are automated checks that run on your code just before you commit changes to your version control system, such as Git. These hooks can cover code formatting, syntax error detection, and more, helping to catch and fix common issues early in the development process.
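To make the idea concrete, here is a minimal sketch of what a hook does under the hood. It assumes the usual contract in which a hook receives the staged file names as arguments and signals failure with a non-zero exit code. The function name `check_trailing_whitespace` and the check itself are illustrative and not taken from any real hook.

```python
from pathlib import Path


def check_trailing_whitespace(filenames):
    """Return 1 if any file has a line ending in spaces or tabs, else 0."""
    exit_code = 0
    for name in filenames:
        text = Path(name).read_text(encoding="utf-8")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if line != line.rstrip(" \t"):
                # Report the offending location, as a linter would.
                print(f"{name}:{lineno}: trailing whitespace")
                exit_code = 1
    return exit_code
```

A real hook script would typically pass `sys.argv[1:]` to such a function and hand the result to `sys.exit`, so that a return value of 1 stops the commit.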

Why Do We Need It?

In data science, even minor mistakes can lead to significant errors in analysis and results. Pre-commit hooks help maintain code quality, improve readability, and ensure consistency across the project, saving time and headaches in the long run. They enforce a discipline of checking code standards and errors before making a commit, ensuring that your project's master branch remains pristine and deployable at any time.

Setting Up Pre-Commit in a Project

Installation: Begin by installing pre-commit using pip:

    pip install pre-commit

Setting Up Pre-Commit in Your Project: Navigate to your project directory and create a .pre-commit-config.yaml file. This file will specify which hooks pre-commit will use.

    touch .pre-commit-config.yaml

We can also start by using a sample configuration:

    pre-commit sample-config > .pre-commit-config.yaml

Define Hooks: Customize your configuration file to include hooks for tasks such as linting and code formatting.

Install the Git Hook: Register pre-commit with your local Git repository so that the hooks run on every commit:

    pre-commit install

7 Essential Pre-Commit Hooks for Data Science Projects

We've identified seven pivotal pre-commit hooks beneficial for data science endeavors, each serving a unique purpose from preventing the commit of large files to ensuring code security and consistency.

Note that each hook has its own specifications and configuration options, so we invite you to look into the documentation of each for more customization.

1. check-added-large-files

GitHub limits the size of files allowed in repositories. If you try to add or update a file larger than 50 MiB, Git will issue a warning, but the changes will still be pushed to your repository successfully; files larger than 100 MiB are blocked outright. To reduce potential performance issues, it is usually better to keep such files out of the repository in the first place than to undo the commit afterwards.

This hook prevents large files, such as dataset dumps, from being committed, saving time and effort and encouraging good data management practices.
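The check itself is simple. The sketch below is a simplified illustration of the idea, not the hook's actual implementation; the function name `find_large_files` is ours, and the 500 KB default is an assumption that mirrors what we understand the hook's default limit to be.

```python
from pathlib import Path


def find_large_files(filenames, max_kb=500):
    """Return (name, size_kb) pairs for files larger than max_kb kilobytes."""
    too_large = []
    for name in filenames:
        size_kb = Path(name).stat().st_size / 1024
        if size_kb > max_kb:
            too_large.append((name, round(size_kb, 1)))
    return too_large
```

Any non-empty result would correspond to a failed hook, which is the moment to move the file to external storage or add it to .gitignore.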

2. check-json

If your project contains small JSON files, such as configuration, settings, or mapping files that change from time to time, you can use this hook to verify that their JSON syntax is valid.
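In spirit, the check amounts to parsing each file and reporting where parsing fails. Here is a small sketch using only the standard library; the helper name `json_syntax_error` is hypothetical, and the real hook may perform additional checks.

```python
import json


def json_syntax_error(text):
    """Return None if text is valid JSON, otherwise a short error message."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        return f"line {exc.lineno}, column {exc.colno}: {exc.msg}"
    return None
```

A trailing comma, a common slip when editing configuration by hand, is enough to make this return an error message instead of None.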

3. requirements-txt-fixer

This hook sorts and cleans up requirements.txt files, ensuring that your project's dependencies are clearly and consistently declared.
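As a rough illustration of this kind of tidying, the sketch below sorts requirement lines case-insensitively and drops blank lines. It assumes a file with no comments or pip options, which the real hook handles and this sketch does not; the function name `sort_requirements` is ours.

```python
def sort_requirements(text):
    """Sort requirement lines case-insensitively and drop blank lines."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        return ""
    return "\n".join(sorted(lines, key=str.lower)) + "\n"
```

A stable ordering keeps diffs small when two people add dependencies in the same pull request.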

4. detect-secrets

Data science projects often require the use of sensitive information, such as API keys or passwords. This hook helps to prevent the accidental commit of secrets, scanning for and flagging them before they make it into the repository. Using a secret manager should typically prevent any issues, but occasionally, during proof of concept (POC) stages or exploratory work, this precaution could be overlooked.
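Secret scanners generally combine two ideas: patterns for known key formats, and a measure of how random a string looks. The sketch below illustrates both under our own assumptions; the pattern, the 20-character minimum, the 4.0 threshold, and the function names are illustrative choices, not detect-secrets' actual rules.

```python
import math
import re
from collections import Counter

# AWS access key IDs commonly start with "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_PATTERN = re.compile(r"AKIA[0-9A-Z]{16}")


def shannon_entropy(value):
    """Return the Shannon entropy of a string in bits per character."""
    if not value:
        return 0.0
    counts = Counter(value)
    total = len(value)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_like_secret(token, entropy_threshold=4.0):
    """Flag tokens that match a known key format or look random (assumed thresholds)."""
    if AWS_KEY_PATTERN.search(token):
        return True
    return len(token) >= 20 and shannon_entropy(token) > entropy_threshold
```

Ordinary identifiers such as variable names are short and repetitive, so they score low, while long random tokens score high. Heuristics like this produce false positives, which is why real scanners let you maintain a baseline of accepted findings.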

5. sqlfluff

For projects involving SQL, this linter and auto-formatter can ensure that SQL scripts are both stylistically consistent and adhere to best practices. This is particularly useful in data science projects that interact with databases.

6. ruff-pre-commit

Ruff stands out as an incredibly swift linter and code formatter, crafted in Rust, and it is set to replace an extensive array of tools including flake8, autoflake, isort, and black. It is quickly establishing itself as the primary linting tool within the Python community, with the expectation that it will gradually supplant older utilities. Major projects like FastAPI, pandas, and Apache Airflow are beginning to adopt Ruff, signaling its rising prominence and adoption.

7. bandit

A security linter from PyCQA, bandit scans your Python code for common security issues. ML projects often process sensitive data, making security a top priority.
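To give a feel for how this kind of static analysis works, here is a toy sketch that walks a module's syntax tree and reports calls that are commonly considered risky, such as eval or unpickling untrusted data. The rule set `RISKY_CALLS` and the function names are hypothetical and deliberately tiny; Bandit ships many more checks and works differently in its details.

```python
import ast

# Hypothetical, deliberately small rule set for illustration only.
RISKY_CALLS = {"eval", "exec", "pickle.load", "pickle.loads"}


def call_name(node):
    """Return a dotted name such as 'pickle.loads' for a call target, or None."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute) and isinstance(node.value, ast.Name):
        return f"{node.value.id}.{node.attr}"
    return None


def find_risky_calls(source):
    """Return sorted (line_number, call_name) pairs for calls in RISKY_CALLS."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = call_name(node.func)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)
```

Loading pickled models from untrusted sources is a typical case in ML code where a finding like this deserves a second look.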

Incorporating Hooks into Your Configuration

The following snippet provides a blueprint for setting up your .pre-commit-config.yaml file with the hooks mentioned, ensuring a robust setup for your data science projects:

    repos:
      - repo: https://github.com/pre-commit/pre-commit-hooks
        rev: v4.5.0
        hooks:
          - id: check-added-large-files
            args: ['--maxkb=50000']  # Set maximum file size to 50 MB
          - id: check-json
          - id: requirements-txt-fixer
      - repo: https://github.com/PyCQA/bandit
        rev: 1.7.8
        hooks:
          - id: bandit
            args: ['-r']
      - repo: https://github.com/Yelp/detect-secrets
        rev: v1.4.0
        hooks:
          - id: detect-secrets
      - repo: https://github.com/astral-sh/ruff-pre-commit
        rev: v0.3.5
        hooks:
          - id: ruff
            types_or: [python, pyi, jupyter]
            args: [--fix]
          - id: ruff-format
            types_or: [python, pyi, jupyter]
      - repo: https://github.com/sqlfluff/sqlfluff
        rev: 1.4.5
        hooks:
          - id: sqlfluff-lint
            args: [--dialect, "snowflake"]
          - id: sqlfluff-fix
            args: [--dialect, "snowflake"]

Using Pre-Commit in Your Commits

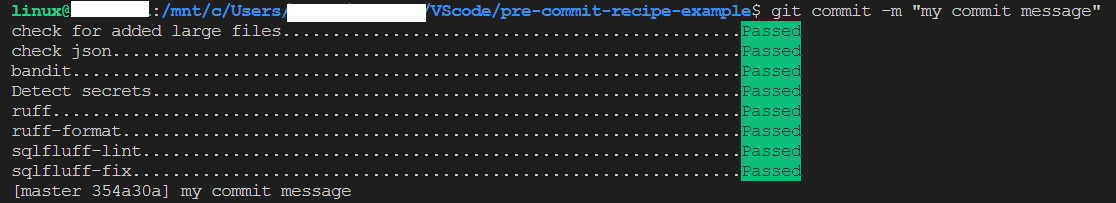

Once configured, pre-commit runs automatically before each commit, flagging any issues for correction. This process ensures that only polished, “error-free” code makes its way into your repository.

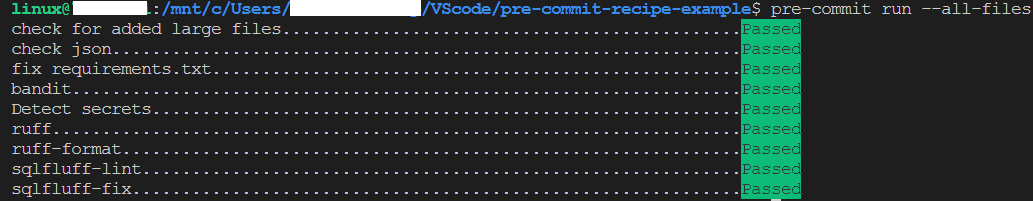

To manually run pre-commit on all files in the project, you can use:

    pre-commit run --all-files

This command is especially useful for cleaning up an existing project or checking that all files comply with your configured hooks.

Note that some hooks might make changes to your files in order to fix formatting issues. You’ll need to stage the new changes with git add before re-running pre-commit or committing your final changes.

Beyond Code Quality: Enhancing Workflow and Collaboration

Pre-commit doesn't just refine your code; it streamlines your entire development process. By intercepting issues early, it minimizes the need for later fixes, allowing a smoother progression of feature development. It's akin to the culinary practice of preparing all ingredients before cooking, setting the stage for an efficient and enjoyable experience.

Moreover, pre-commit fosters a culture of quality and collaboration in team projects. It ensures that all contributors adhere to the same high standards, resulting in a cohesive and maintainable codebase. Integrating pre-commit into your data science projects not only elevates your code quality but also embodies a commitment to excellence, efficiency, and teamwork, the cornerstones of successful data science ventures.

Closing Note

While pre-commit hooks serve as a foundational tool to improve code quality and streamline development workflows in data science projects, recognizing their limitations opens the door to a broader spectrum of alternatives that can adapt more closely to a project's unique requirements and team dynamics.

Alternatives such as real-time linting in Integrated Development Environments (IDEs), automated checks through Continuous Integration (CI) services, and the nuanced feedback from manual code reviews, present versatile options for those seeking greater flexibility or dealing with specific challenges. These options not only allow for a more customized development approach but also enhance workflow efficiency and foster stronger collaboration within teams.

Embracing this diversity of tools and techniques, much like the art of cooking, is about choosing the right mix to bring out the best in your projects—crafting masterful data science creations that stand out for their precision, innovation, and quality.